Bidirectional LSTM Explained: Architecture, Forward-Backward Pass & Practical Tutorial

Modern deep learning tasks often require understanding context from both past and future — and that’s exactly what a Bidirectional LSTM (BiLSTM) does best. While a standard LSTM processes a sequence forward in time, a Bidirectional LSTM processes it both ways to capture dependencies that simple unidirectional models would miss.

If you’re building models for NLP, speech recognition, or time series forecasting, adding a Bidirectional LSTM can noticeably improve performance, provided the whole input sequence is available when the model makes a prediction.

This advanced tutorial covers:

✅ How Bidirectional LSTM works

✅ The full forward-backward architecture

✅ Mathematical flow for both directions

✅ Keras and TensorFlow implementation examples

✅ NLP and real-world use cases

✅ Tips for tuning and extending BiLSTMs

🔍 What is a Bidirectional LSTM?

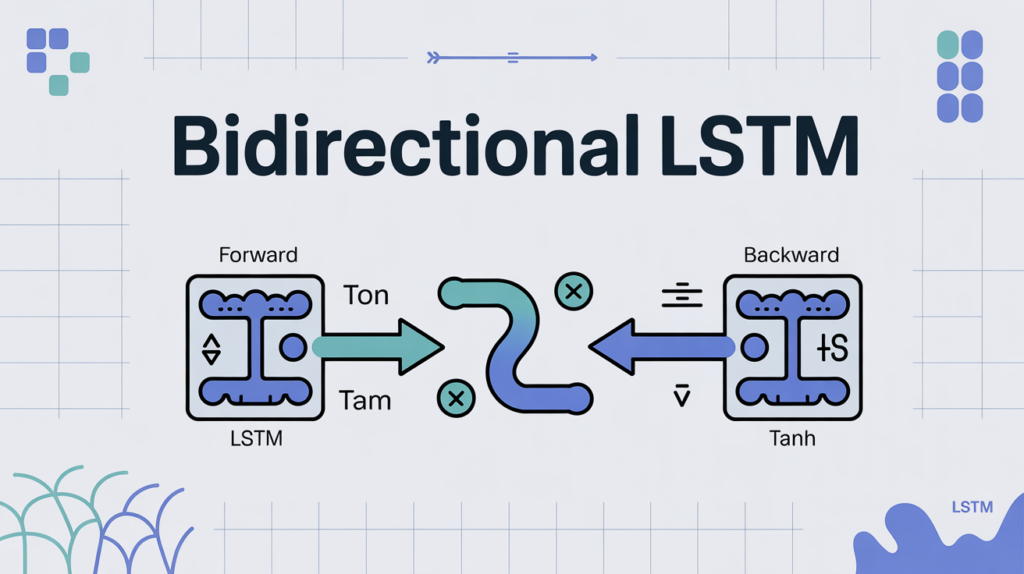

A Bidirectional LSTM combines two LSTMs:

- Forward LSTM: processes the sequence as usual, from start to end.

- Backward LSTM: processes the same sequence in reverse, from end to start.

At each time step, the forward and backward hidden states are merged (typically via concatenation or summation) before being fed to the next layer or used for output.
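To make the merge step concrete, here is a minimal NumPy sketch. It assumes the forward and backward hidden states for one sequence are already available as arrays of shape `(timesteps, units)`, aligned to the original time order. The random values are placeholders, not real LSTM outputs.

```python
import numpy as np

rng = np.random.default_rng(0)
timesteps, units = 5, 4

# Placeholder hidden states, one row per time step (aligned to the original order).
h_forward = rng.standard_normal((timesteps, units))
h_backward = rng.standard_normal((timesteps, units))

# Concatenation keeps both directions side by side: the feature size doubles.
merged_concat = np.concatenate([h_forward, h_backward], axis=-1)

# Summation adds them element-wise: the feature size stays the same.
merged_sum = h_forward + h_backward

print(merged_concat.shape)  # (5, 8)
print(merged_sum.shape)     # (5, 4)
```

Concatenation is the default `merge_mode` of the Keras `Bidirectional` wrapper. Summation keeps the downstream layers smaller, at the cost of mixing the two directions into one vector.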

✅ Key benefit: By seeing both past and future context for each time step, BiLSTMs are powerful for tasks where surrounding words or frames matter — like sentiment analysis or named entity recognition.

🗂️ Why Use Bidirectional LSTM?

🔑 Contextual Power: A word like “lead” could mean a metal or a verb depending on both the previous and the next words. A BiLSTM sees both sides of the context, so it is better placed to resolve this ambiguity.

✅ Improved Accuracy: On sequence labeling tasks such as named entity recognition and part-of-speech tagging, adding bidirectionality usually improves performance over a unidirectional LSTM.

📊 Versatile: Works for text, audio, DNA sequences — any ordered data where both directions carry meaning.

⚙️ Bidirectional LSTM Architecture

A BiLSTM layer wraps two LSTM layers:

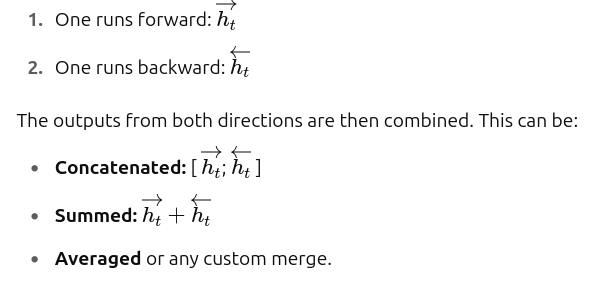

🔄 How Bidirectional LSTM Processes a Sequence

✅ Step-by-Step:

- Input sequence: [x₁, x₂, ..., xₙ]

- Forward LSTM processes: x₁ → x₂ → ... → xₙ

- Backward LSTM processes: xₙ → xₙ₋₁ → ... → x₁

- At each time step t, merge \(\overrightarrow{h_t}\) and \(\overleftarrow{h_t}\).

✅ Result: Every output has information from both directions.
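The step-by-step flow can be illustrated without any LSTM math at all. In this toy sketch, a running sum stands in for the LSTM cell (an assumption purely for illustration), so each forward state summarizes x₁..xₜ and each backward state summarizes xₜ..xₙ. The helper names are hypothetical.

```python
def run_direction(sequence, step, initial_state):
    """Apply a recurrent step function left to right and return every state."""
    state = initial_state
    states = []
    for x in sequence:
        state = step(state, x)
        states.append(state)
    return states


def toy_step(state, x):
    # Stand-in for an LSTM cell: the "hidden state" is just a running sum.
    return state + x


def bidirectional(sequence, step, initial_state=0):
    forward = run_direction(sequence, step, initial_state)
    # Run on the reversed sequence, then reverse the outputs back so that
    # index t in both lists refers to the same input position x_t.
    backward = run_direction(sequence[::-1], step, initial_state)[::-1]
    # Merge by pairing: one (forward, backward) tuple per time step.
    return list(zip(forward, backward))


xs = [1, 2, 3, 4]
print(bidirectional(xs, toy_step))  # [(1, 10), (3, 9), (6, 7), (10, 4)]
```

At the first position, the forward state has only seen x₁ (value 1) while the backward state has already seen the whole sequence (value 10). Re-reversing the backward outputs is the step that is easiest to forget: without it, position t would be paired with the backward state for position n − t + 1.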

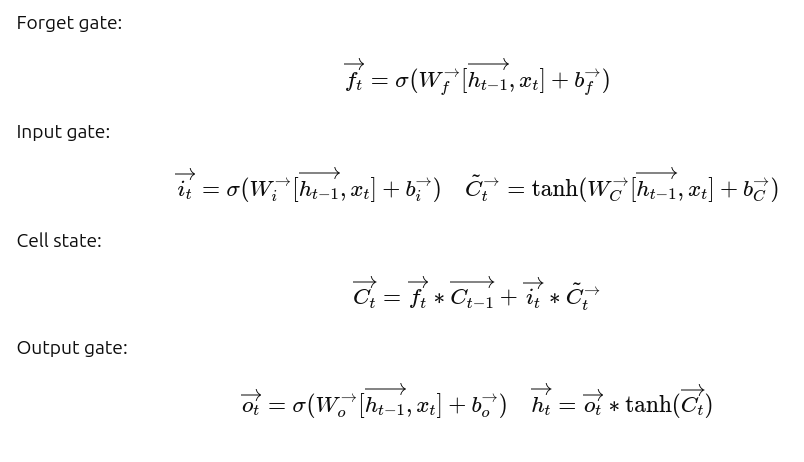

🧬 Mathematics Behind Bidirectional LSTM

Let’s see the core math.

At time step t:

➡️ Forward LSTM
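For reference, these are the standard LSTM equations (the common formulation without peephole connections) written for the forward direction. The symbols \(W\), \(U\), \(b\) for input weights, recurrent weights and biases, \(\sigma\) for the logistic sigmoid and \(\odot\) for element-wise multiplication are assumed notation, not taken from the original figure.

\[
\begin{aligned}
i_t &= \sigma\left(W_i x_t + U_i \overrightarrow{h_{t-1}} + b_i\right) \\
f_t &= \sigma\left(W_f x_t + U_f \overrightarrow{h_{t-1}} + b_f\right) \\
o_t &= \sigma\left(W_o x_t + U_o \overrightarrow{h_{t-1}} + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c \overrightarrow{h_{t-1}} + b_c\right) \\
\overrightarrow{c_t} &= f_t \odot \overrightarrow{c_{t-1}} + i_t \odot \tilde{c}_t \\
\overrightarrow{h_t} &= o_t \odot \tanh\left(\overrightarrow{c_t}\right)
\end{aligned}
\]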

⬅️ Backward LSTM

It runs the same equations, but with its own separate weights and on the reversed input:

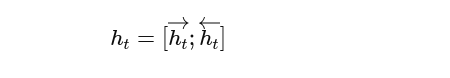
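Putting both directions together, here is a minimal NumPy sketch of a BiLSTM forward pass (inference only, no training). It follows the standard gate equations above; the weights are random placeholders, the gate order inside the stacked weight matrices is an arbitrary choice, and the helper names (`init_lstm`, `lstm_forward`, `bilstm_forward`) are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_lstm(input_dim, units, rng):
    """Random weights for one LSTM direction (gate order here: i, f, c~, o)."""
    return {
        "W": rng.standard_normal((input_dim, 4 * units)) * 0.1,
        "U": rng.standard_normal((units, 4 * units)) * 0.1,
        "b": np.zeros(4 * units),
    }


def lstm_forward(xs, params):
    """Run one LSTM over xs of shape (timesteps, input_dim); return all h_t."""
    units = params["U"].shape[0]
    h = np.zeros(units)
    c = np.zeros(units)
    outputs = []
    for x_t in xs:
        z = x_t @ params["W"] + h @ params["U"] + params["b"]
        i = sigmoid(z[:units])                      # input gate
        f = sigmoid(z[units:2 * units])             # forget gate
        c_tilde = np.tanh(z[2 * units:3 * units])   # candidate cell state
        o = sigmoid(z[3 * units:])                  # output gate
        c = f * c + i * c_tilde                     # update the cell state
        h = o * np.tanh(c)                          # new hidden state
        outputs.append(h)
    return np.stack(outputs)


def bilstm_forward(xs, fwd_params, bwd_params):
    h_fwd = lstm_forward(xs, fwd_params)
    # Backward pass: reverse time, run a separately weighted LSTM, then re-align.
    h_bwd = lstm_forward(xs[::-1], bwd_params)[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=-1)


rng = np.random.default_rng(42)
timesteps, input_dim, units = 6, 3, 4
xs = rng.standard_normal((timesteps, input_dim))
fwd = init_lstm(input_dim, units, rng)
bwd = init_lstm(input_dim, units, rng)
out = bilstm_forward(xs, fwd, bwd)
print(out.shape)  # (6, 8)
```

The two directions share no parameters, and row t of `out` holds \(\overrightarrow{h_t}\) followed by \(\overleftarrow{h_t}\) for the same input position. Changing the first input therefore changes every forward state but only the first backward state.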

🔗 Merge Both Directions

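The output at each time step combines both directions, most commonly by concatenation:

\[
y_t = \left[\overrightarrow{h_t};\ \overleftarrow{h_t}\right]
\]

An element-wise sum, \(y_t = \overrightarrow{h_t} + \overleftarrow{h_t}\), is a common alternative.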

🧩 Practical Use Cases for Bidirectional LSTM

✅ Named Entity Recognition (NER):

Knowing the entire sentence helps label words correctly.

✅ Sentiment Analysis:

Captures phrases like “not bad” or “wasn’t great” that require context from both sides.

✅ Speech Recognition:

When you have the entire audio segment available, bidirectionality boosts accuracy.

✅ DNA Sequence Analysis:

Biological data often has dependencies in both directions.

✅ Time Series Anomaly Detection:

If future data is known, you can detect anomalies with better precision.

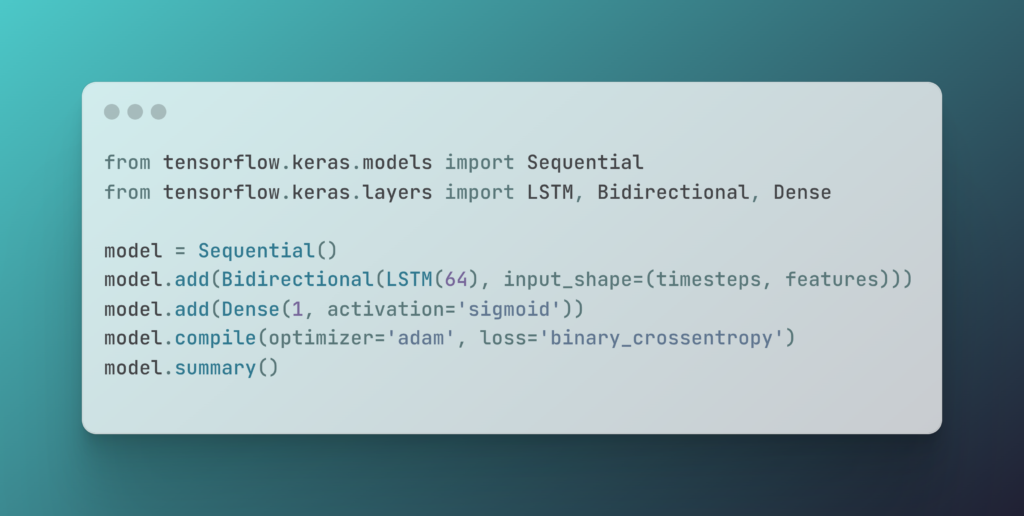

🖥️ Bidirectional LSTM in Keras

✅ Key point: The Bidirectional wrapper handles both directions for you!
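A minimal sketch of what this looks like with `tf.keras`, assuming TensorFlow 2.x is installed. The vocabulary size, sequence length and layer sizes are hypothetical values for a binary text classifier such as sentiment analysis, not tuned settings.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

# Hypothetical sizes for a binary text classifier (e.g. sentiment).
vocab_size, seq_len = 10000, 50

model = tf.keras.Sequential([
    layers.Input(shape=(seq_len,)),
    layers.Embedding(vocab_size, 64),
    # One wrapper = a forward LSTM plus a backward LSTM with its own weights;
    # 32 units per direction, concatenated to 64 features.
    layers.Bidirectional(layers.LSTM(32), merge_mode="concat"),
    layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# The wrapper on its own, returning one merged vector per time step.
bilstm = layers.Bidirectional(layers.LSTM(32, return_sequences=True))
dummy = np.random.rand(2, 10, 8).astype("float32")  # (batch, timesteps, features)
print(bilstm(dummy).shape)  # (2, 10, 64)
```

Without `return_sequences=True`, the wrapped LSTM returns only its final state per direction, which suits whole-sequence classification; with it, you get one merged vector per time step, which sequence labeling needs.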

🔍 Tips for Training Bidirectional LSTMs

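✔️ Memory and Batch Size: A bidirectional layer has twice the parameters of a single LSTM with the same number of units and keeps activations for both directions, so it needs roughly double the memory. Plan your batch size accordingly.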

✔️ Dropout: Helps regularize the model and prevent overfitting.

✔️ Attention Mechanisms: Adding attention over the BiLSTM outputs lets the model weight the most informative time steps, which often improves performance.

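✔️ Stacking Layers: You can stack multiple Bidirectional LSTMs for deeper context. Every layer that feeds another recurrent layer must return the full sequence (return_sequences=True in Keras).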
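The stacking and dropout tips can be combined in one model. This is a sketch assuming TensorFlow 2.x and a sequence labeling setup (one label per time step, as in NER); the feature count, tag count, layer sizes and dropout rates are hypothetical.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

num_features, num_tags = 16, 5  # hypothetical: per-step features and label count

model = tf.keras.Sequential([
    layers.Input(shape=(None, num_features)),  # variable-length sequences
    # The lower layer returns the full sequence so the next BiLSTM gets one vector per step.
    layers.Bidirectional(layers.LSTM(64, return_sequences=True, dropout=0.2)),
    layers.Bidirectional(layers.LSTM(32, return_sequences=True, dropout=0.2)),
    layers.Dropout(0.3),
    # Dense on a 3D tensor acts per time step: one tag distribution per position.
    layers.Dense(num_tags, activation="softmax"),
])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

batch = np.random.rand(3, 7, num_features).astype("float32")
print(model.predict(batch, verbose=0).shape)  # (3, 7, 5)
```

The `dropout` argument applies to the LSTM inputs. Keras also offers `recurrent_dropout`, but in TensorFlow a non-zero value prevents the layer from using the fast cuDNN kernel on GPU.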

⚖️ Bidirectional LSTM vs Unidirectional LSTM

| Feature | Unidirectional LSTM | Bidirectional LSTM |
|---|---|---|
| Context | Past only | Past & future |
| Accuracy | Good | Often better |
| Use Case | Streaming | Offline sequence tasks |
| Speed | Faster | Slower (more compute) |
| Applications | Real-time | Full context available |

✅ Key takeaway: A standard BiLSTM needs the entire sequence before it can produce any output, so it does not fit real-time or streaming predictions. For offline tasks, it’s gold.
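The extra cost in the “Speed” row can be quantified by counting parameters. This small sketch assumes the Keras-style LSTM with one bias vector per gate; the function names and the sizes in the example are hypothetical.

```python
def lstm_params(input_dim, units):
    # 4 gates, each with input weights, recurrent weights and a bias vector.
    return 4 * (units * (input_dim + units) + units)


def bilstm_params(input_dim, units):
    # Two independent LSTMs (forward and backward) with no shared weights.
    return 2 * lstm_params(input_dim, units)


print(lstm_params(64, 32))    # 12416
print(bilstm_params(64, 32))  # 24832
```

For a 64-dimensional input and 32 units, the bidirectional layer holds exactly twice the parameters. Some libraries (for example PyTorch's `nn.LSTM`) keep two bias vectors per gate, so their counts come out slightly higher.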

⚙️ Advanced Variants

Once you master BiLSTM, explore:

- Stacked Bidirectional LSTM: Multiple layers for deeper learning.

- Attention-Based BiLSTM: Weighs the importance of time steps.

- Grid LSTM: Handles multi-dimensional data.

- Peephole LSTM: Adds peephole connections that let the gates read the cell state directly.
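To show what “weighs the importance of time steps” means for an attention-based BiLSTM, here is a minimal NumPy sketch of attention pooling over BiLSTM outputs. It uses a single dot-product scoring vector, which is only one of several possible scoring functions (additive attention is another); the function name and the random inputs are hypothetical stand-ins for a trained model.

```python
import numpy as np


def attention_pool(outputs, w):
    """Weight each time step of BiLSTM outputs and return one summary vector.

    outputs: (timesteps, features) array, e.g. concatenated BiLSTM states.
    w: (features,) scoring vector (learned in a real model; random here).
    """
    scores = outputs @ w                    # one relevance score per time step
    scores = scores - scores.max()          # shift for numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()  # softmax over time steps
    context = weights @ outputs             # weighted sum of the time steps
    return context, weights


rng = np.random.default_rng(1)
outputs = rng.standard_normal((6, 8))  # stand-in for BiLSTM outputs
w = rng.standard_normal(8)
context, weights = attention_pool(outputs, w)
print(context.shape, round(weights.sum(), 6))  # (8,) 1.0
```

The `weights` vector is also useful for inspection: it shows which positions the model relied on most for its prediction.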

🌍 Useful Links

- Colah’s Blog: Understanding LSTMs

- TensorFlow: Bidirectional Wrapper

❓ FAQs About Bidirectional LSTM

1. When should I use Bidirectional LSTM?

Whenever the whole sequence is available up front — NLP, offline audio, DNA, etc.

2. Is it always better than normal LSTM?

Not for streaming or real-time tasks, where future inputs are not yet available. For offline sequence labeling, it usually performs better.

3. Does it double training time?

It roughly doubles the computation of the recurrent layer itself, but total training time usually grows by less, because other layers and data loading are unaffected.

4. Can you combine BiLSTM with attention?

Absolutely. Adding attention over BiLSTM outputs is a well-established pattern in NLP, especially in pre-Transformer models.

5. How do you visualize both directions?

Split the merged output into its forward and backward halves and plot each one (for example as a heatmap over time steps) to debug what each direction has learned.
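Before plotting, the two directions have to be separated. This sketch assumes the outputs came from a concatenating merge with the forward states first, which is how the Keras `Bidirectional` wrapper orders them with `merge_mode="concat"`; the function name and the dummy array are hypothetical.

```python
import numpy as np


def split_directions(merged, units):
    """Split concat-merged BiLSTM outputs into (forward, backward) halves.

    Assumes forward states come first; `merged` has shape (..., 2 * units).
    """
    return merged[..., :units], merged[..., units:]


merged = np.arange(2 * 3 * 8, dtype=float).reshape(2, 3, 8)  # (batch, steps, 2*units)
fwd, bwd = split_directions(merged, units=4)
print(fwd.shape, bwd.shape)  # (2, 3, 4) (2, 3, 4)
```

Each half can then be passed to a heatmap function, for example matplotlib's `imshow(fwd[0].T)`, to show units against time steps for one sequence.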


Discover more from Neural Brain Works - The Tech blog
