
LSTM Attention Mechanism: A Complete Guide to Understanding, Implementing & Visualizing Attention in Deep Learning

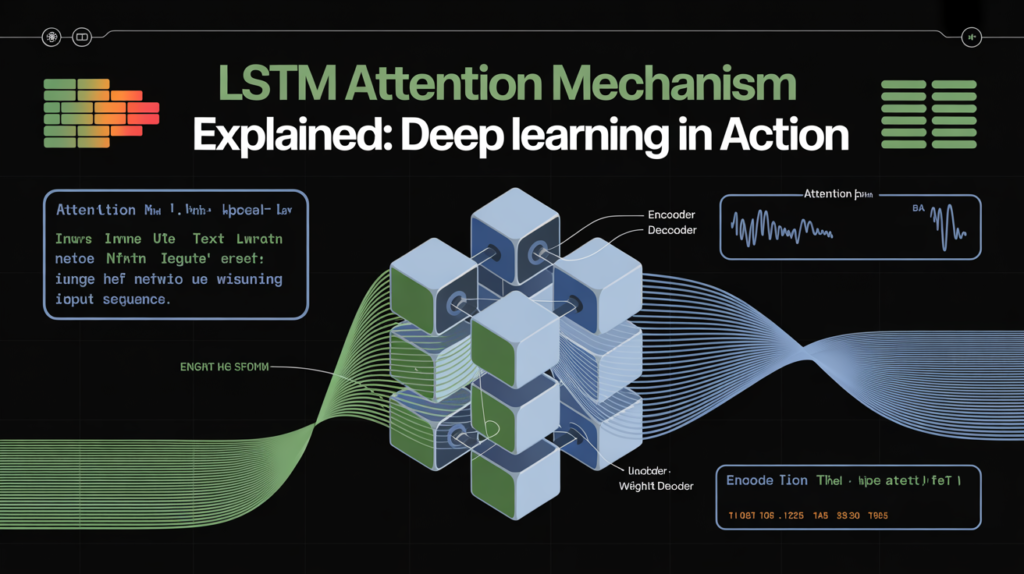

Attention mechanisms transformed deep learning, particularly Natural Language Processing (NLP). Combined with Long Short-Term Memory (LSTM) networks, attention helps models focus on the most relevant parts of an input sequence. This makes LSTM models more interpretable and often more accurate, especially on complex tasks like translation, summarization, and question answering.

In this comprehensive guide, we’ll break down the LSTM attention mechanism from theory to implementation using Python, TensorFlow, and Keras. You’ll learn about Bahdanau and Luong attention, context vectors, attention weights, and how to visualize what your model is “looking at” during predictions.

What Is an LSTM Attention Mechanism?

An attention mechanism in an LSTM model allows the network to selectively focus on different parts of the input sequence when generating each output token. Instead of treating all time steps equally, attention assigns each input time step a weight based on its relevance to the current output.

In encoder-decoder setups:

- The encoder processes the input sequence into hidden states.

- The decoder uses attention to “look back” at those states and focus on the most relevant ones.

This significantly improves model performance for tasks involving long or complex sequences.

Why Use Attention with LSTM?

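LSTMs alone are powerful, but they struggle with long-term dependencies when sequences get very long. In a plain encoder-decoder model, the entire input must be compressed into the encoder’s final hidden state, a fixed-size bottleneck that loses information on long inputs. Attention mechanisms address this by: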

- Allowing direct access to all encoder outputs at each decoding step

- Improving context-awareness in sequence generation

- Enhancing model interpretability

- Reducing reliance on memory cells alone

Applications where LSTM attention mechanisms shine:

- Machine translation

- Text summarization

- Named Entity Recognition (NER)

- Dialogue systems

- Sentiment analysis with long reviews

Bahdanau Attention vs. Luong Attention: What’s the Difference?

Two of the most widely used attention types in LSTM models are:

Bahdanau Attention (Additive)

- Introduced in 2014 by Bahdanau et al.

- Calculates attention scores using a feedforward network

- Suitable when you want more flexibility in learning alignment

Luong Attention (Multiplicative)

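- Introduced in 2015 by Luong et al.; cheaper to compute

- Scores encoder outputs against the current decoder state with a dot product or a learned bilinear form (the “general” score); the paper also evaluates a concatenation-based variant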

| Feature | Bahdanau Attention | Luong Attention |
|---|---|---|
| Score function | Additive (learned feedforward network) | Multiplicative (dot or general) |
| Decoder state used | Previous decoder state | Current decoder state |
| Complexity | Higher | Lower |
| Use Case | General NLP tasks | Efficient decoding |
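To make the difference concrete, here is a minimal NumPy sketch of the score functions for a single decoder step. The function names (luong_dot, luong_general, bahdanau_additive) and the sizes are illustrative assumptions, and the weight matrices are random stand-ins for parameters a real model would learn.

```python
import numpy as np

def luong_dot(dec_state, enc_states):
    """Luong 'dot': score_i = h_t . h_i (no learned parameters; sizes must match)."""
    return enc_states @ dec_state

def luong_general(dec_state, enc_states, W_a):
    """Luong 'general': score_i = h_t^T W_a h_i, with W_a of shape (d_dec, d_enc)."""
    return enc_states @ (W_a.T @ dec_state)

def bahdanau_additive(prev_dec_state, enc_states, W_a, U_a, v_a):
    """Bahdanau additive: score_i = v_a^T tanh(W_a s_{t-1} + U_a h_i).

    A one-hidden-layer feedforward network; W_a: (a, d_dec), U_a: (a, d_enc), v_a: (a,).
    """
    return np.tanh(prev_dec_state @ W_a.T + enc_states @ U_a.T) @ v_a

# Random stand-ins for trained states and weights (hypothetical sizes)
rng = np.random.default_rng(0)
d, T, a = 4, 5, 3
enc_states = rng.normal(size=(T, d))   # encoder hidden states h_1..h_T
dec_state = rng.normal(size=(d,))      # one decoder state
print(luong_dot(dec_state, enc_states))
print(bahdanau_additive(dec_state, enc_states,
                        rng.normal(size=(a, d)), rng.normal(size=(a, d)), rng.normal(size=(a,))))
```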

How LSTM Attention Works: The Architecture

At a high level, attention involves:

- Encoder LSTM processes the input sequence and outputs hidden states.

- Decoder LSTM generates the output step-by-step.

- For each decoding step:

  - Calculate attention scores for all encoder outputs.

  - Apply softmax to get attention weights.

  - Generate a context vector as the weighted sum of encoder outputs.

  - Use this vector with the decoder state to produce the next word/token.

This dynamic focus allows the decoder to “pay attention” to different parts of the input as needed.
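The steps above can be sketched for a single decoding step in NumPy. This is a minimal illustration assuming dot-product scoring and a Luong-style combination of the context vector and decoder state through a matrix W_c; attention_step and W_c are hypothetical names, and in a real model W_c would be learned rather than random.

```python
import numpy as np

def softmax(x):
    # Subtract the max for numerical stability before exponentiating
    e = np.exp(x - np.max(x))
    return e / e.sum()

def attention_step(dec_state, enc_states, W_c):
    """One decoding step of dot-product attention.

    dec_state:  (d,)     current decoder hidden state
    enc_states: (T, d)   encoder hidden states, one per input position
    W_c:        (d, 2d)  hypothetical projection for the combined vector
    """
    scores = enc_states @ dec_state     # 1. one score per encoder step, shape (T,)
    weights = softmax(scores)           # 2. attention weights that sum to 1
    context = weights @ enc_states      # 3. weighted sum of encoder states, shape (d,)
    # 4. combine context and decoder state; the result would feed the output layer
    combined = np.tanh(W_c @ np.concatenate([context, dec_state]))
    return combined, weights, context

rng = np.random.default_rng(0)
enc_states = rng.normal(size=(6, 4))
dec_state = rng.normal(size=(4,))
W_c = rng.normal(size=(4, 8))
combined, weights, context = attention_step(dec_state, enc_states, W_c)
print(weights.round(3))
```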

LSTM Attention Mechanism in Python (Keras)

Let’s walk through a simple LSTM attention mechanism implementation.

Step 1: Define Custom Attention Layer

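```python
import tensorflow as tf
from tensorflow.keras.layers import Layer


class AttentionLayer(Layer):
    """Dot-product (Luong-style) attention of decoder outputs over encoder outputs."""

    def call(self, inputs):
        # encoder_output: (batch, enc_len, units); decoder_output: (batch, dec_len, units).
        # The dot product requires both LSTMs to use the same number of units.
        encoder_output, decoder_output = inputs

        # One score per (decoder step, encoder step) pair: (batch, dec_len, enc_len)
        score = tf.matmul(decoder_output, encoder_output, transpose_b=True)

        # Normalize over the encoder axis so each decoder step's weights sum to 1
        attention_weights = tf.nn.softmax(score, axis=-1)

        # Weighted sum of encoder outputs: (batch, dec_len, units)
        context_vector = tf.matmul(attention_weights, encoder_output)

        # Return the weights as well so they can be visualized later
        return context_vector, attention_weights
```

Step 2: Integrate into an Encoder-Decoder Model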

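Combine the encoder LSTM, decoder LSTM, and attention layer. Run the encoder with return_sequences=True so attention can see every encoder hidden state, pass the encoder and decoder output sequences to the attention layer, and concatenate the resulting context vectors with the decoder outputs before the final Dense layer that predicts the next token.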

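Note: tensorflow_addons.seq2seq also provides attention wrappers, but TensorFlow Addons has reached end of life. Keras’s built-in Attention (dot-product, Luong-style) and AdditiveAttention (Bahdanau-style) layers are simpler alternatives.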
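A minimal sketch of the full model, assuming teacher forcing during training. Instead of a custom layer, it uses Keras’s built-in layers.Attention, which computes dot-product (Luong-style) scores. The vocabulary sizes, dimensions, and the build_seq2seq_with_attention name are hypothetical, and generating text at inference time would still need a separate step-by-step decoding loop.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers, Model

# Hypothetical sizes for illustration only
SRC_VOCAB, TGT_VOCAB, EMB_DIM, UNITS = 1000, 1200, 64, 128

def build_seq2seq_with_attention():
    # Variable-length sequences of token ids
    enc_in = layers.Input(shape=(None,), dtype="int32", name="encoder_tokens")
    dec_in = layers.Input(shape=(None,), dtype="int32", name="decoder_tokens")

    # Encoder: keep every hidden state so attention can look back at all of them
    enc_emb = layers.Embedding(SRC_VOCAB, EMB_DIM)(enc_in)
    enc_seq, state_h, state_c = layers.LSTM(
        UNITS, return_sequences=True, return_state=True)(enc_emb)

    # Decoder: starts from the encoder's final state, one output vector per target step
    dec_emb = layers.Embedding(TGT_VOCAB, EMB_DIM)(dec_in)
    dec_seq = layers.LSTM(UNITS, return_sequences=True)(
        dec_emb, initial_state=[state_h, state_c])

    # Dot-product attention: query = decoder outputs, value = encoder outputs
    context = layers.Attention()([dec_seq, enc_seq])   # (batch, dec_len, UNITS)

    # Combine context with decoder output, then project to vocabulary logits
    combined = layers.Concatenate()([context, dec_seq])
    attentional = layers.Dense(UNITS, activation="tanh")(combined)
    logits = layers.Dense(TGT_VOCAB)(attentional)
    return Model([enc_in, dec_in], logits)

model = build_seq2seq_with_attention()
model.compile(optimizer="adam",
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True))
```

Training would then call model.fit([source_ids, decoder_input_ids], target_ids, ...), where the labels are the decoder inputs shifted one step ahead.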

Visualizing Attention Weights

One of the most powerful features of attention is that it’s interpretable.

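```python
import matplotlib.pyplot as plt
import seaborn as sns

# attention_weights: NumPy array of shape (batch, output_len, input_len), e.g. the
# softmax weights returned by the attention layer (call .numpy() on a TF tensor).
# Rows are output steps (y-axis); columns are input positions (x-axis).
sns.heatmap(attention_weights[0], cmap="viridis")  # first example in the batch
plt.xlabel("Input Sequence")
plt.ylabel("Output Sequence")
plt.title("Attention Heatmap")
plt.show()
```

This helps you understand: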

- Which input words the model focused on for each output

- If attention aligns with human expectations

- How to debug or improve model training
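Reading the heatmap row by row can also be done programmatically. The sketch below assumes a single example’s weight matrix laid out like the heatmap (rows are output steps, columns are input positions) and reports the most-attended input token for each output token. The function name top_alignments and the toy weights are hypothetical, not real model output.

```python
import numpy as np

def top_alignments(weights, src_tokens, tgt_tokens):
    """Return (output token, most-attended input token, weight) for each output step.

    weights: (len(tgt_tokens), len(src_tokens)) attention matrix for ONE example.
    """
    best = np.argmax(weights, axis=1)   # column with the largest weight in each row
    return [(tgt, src_tokens[j], float(weights[i, j]))
            for i, (tgt, j) in enumerate(zip(tgt_tokens, best))]

# Toy, hand-made weights for illustration (not produced by a model)
src = ["the", "cat", "sat"]
tgt = ["die", "Katze", "saß"]
w = np.array([[0.8, 0.1, 0.1],
              [0.2, 0.7, 0.1],
              [0.1, 0.2, 0.7]])
for tgt_tok, src_tok, weight in top_alignments(w, src, tgt):
    print(f"{tgt_tok:>6} <- {src_tok} ({weight:.2f})")
```

Passing the same token lists to sns.heatmap as xticklabels=src and yticklabels=tgt labels the heatmap axes with words instead of indices.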

Self-Attention vs. Encoder-Decoder Attention

While LSTM attention mechanisms typically use an encoder-decoder architecture, transformers are built on self-attention, where each token attends to every other token in the same sequence.

| Type | Description |
|---|---|
| Encoder-Decoder | Decoder attends to encoder’s output |
| Self-Attention | Every token attends to all others in the same sequence |
| Multi-head Attention | Several attention heads computed in parallel and concatenated |

For LSTM models, the usual choice is encoder-decoder attention with an additive or dot-product score, which keeps the model simple and its weights easy to interpret.

Using Attention in NLP Applications

You can use LSTM attention mechanism in NLP tasks such as:

- Text summarization: Helps focus on key sentences or words.

- Machine translation: Translates based on context from different parts of the sentence.

- Sentiment analysis: Focuses on sentiment-heavy words.

- NER (Named Entity Recognition): Identifies context-sensitive entities better.
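For classification tasks such as sentiment analysis there is no decoder, so a common pattern is attention pooling: score each LSTM output with a small learned network, softmax the scores, and use the weighted sum as the sentence representation. This NumPy sketch assumes the parameters W, b, and v would be learned during training; attention_pool is a hypothetical name, and the returned weights show which words contributed most.

```python
import numpy as np

def attention_pool(H, W, b, v):
    """Collapse LSTM outputs H (T, d) into one vector via attention weights.

    u_t   = tanh(W h_t + b)     hidden representation of each step, shape (a,)
    e_t   = v . u_t              relevance score
    alpha = softmax(e)           weights over time steps
    s     = sum_t alpha_t h_t    sentence vector for the classifier
    """
    u = np.tanh(H @ W.T + b)        # (T, a)
    e = u @ v                       # (T,)
    alpha = np.exp(e - e.max())     # numerically stable softmax
    alpha /= alpha.sum()
    return alpha @ H, alpha         # (d,), (T,)

rng = np.random.default_rng(0)
H = rng.normal(size=(7, 6))         # stand-in for 7 LSTM output vectors of size 6
s, alpha = attention_pool(H, rng.normal(size=(3, 6)), np.zeros(3), rng.normal(size=(3,)))
print(alpha.round(3))
```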

Pretrained embeddings like GloVe or FastText can be used alongside attention to boost accuracy.
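A minimal sketch of preparing pretrained vectors, assuming GloVe’s plain-text format (a word followed by its vector values on each line) and a word_index that maps each token to an integer id starting at 1, with 0 reserved for padding. The helper names are hypothetical; words missing from the pretrained file get small random vectors.

```python
import numpy as np

def parse_glove_lines(lines):
    """Parse GloVe-style text lines ("word v1 v2 ...") into a dict of vectors."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        vectors[parts[0]] = np.asarray(parts[1:], dtype=np.float32)
    return vectors

def build_embedding_matrix(word_index, vectors, dim, seed=0):
    """Rows follow word_index (token -> id); row 0 stays zero for padding."""
    rng = np.random.default_rng(seed)
    matrix = np.zeros((len(word_index) + 1, dim), dtype=np.float32)
    for word, idx in word_index.items():
        vec = vectors.get(word)
        if vec is not None:
            matrix[idx] = vec
        else:
            # Out-of-vocabulary words get small random vectors
            matrix[idx] = rng.normal(scale=0.1, size=dim)
    return matrix

vectors = parse_glove_lines(["good 0.1 0.2", "bad -0.1 -0.2"])
matrix = build_embedding_matrix({"good": 1, "bad": 2, "meh": 3}, vectors, dim=2)
print(matrix)
```

The resulting matrix can initialize a Keras embedding layer, for example tf.keras.layers.Embedding(matrix.shape[0], matrix.shape[1], embeddings_initializer=tf.keras.initializers.Constant(matrix), trainable=False).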

Multi-Head and Scaled Dot-Product Attention

These are advanced attention mechanisms typically used in transformers, but elements can be borrowed for LSTM models too.

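- Scaled Dot-Product Attention: Divides dot-product scores by the square root of the key dimension so they do not grow with vector size and push the softmax into saturation.

- Multi-Head Attention: Projects queries, keys, and values into several lower-dimensional subspaces (heads), runs attention in each head in parallel, then concatenates the results and applies a final linear projection.

Scaling adds almost no cost; multi-head attention is computationally heavier but lets the model attend to several kinds of relationships at once.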
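Both ideas fit in a short NumPy sketch. The function names and weight shapes (square d_model x d_model projections, with d_model divisible by num_heads) are assumptions for illustration. Passing the same sequence as X_q and X_kv gives self-attention; passing decoder states as X_q and encoder outputs as X_kv gives encoder-decoder attention.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    # Q: (Tq, d_k), K: (Tk, d_k), V: (Tk, d_v)
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # scaling keeps scores from growing with d_k
    weights = softmax(scores, axis=-1)  # (Tq, Tk), each row sums to 1
    return weights @ V, weights

def multi_head_attention(X_q, X_kv, Wq, Wk, Wv, Wo, num_heads):
    """Project inputs, split into heads, attend per head, concatenate, project back."""
    d_model = X_q.shape[-1]
    d_head = d_model // num_heads
    Q, K, V = X_q @ Wq, X_kv @ Wk, X_kv @ Wv
    heads = []
    for h in range(num_heads):
        sl = slice(h * d_head, (h + 1) * d_head)   # this head's slice of each projection
        out, _ = scaled_dot_product_attention(Q[:, sl], K[:, sl], V[:, sl])
        heads.append(out)
    return np.concatenate(heads, axis=-1) @ Wo     # (Tq, d_model)

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 8))                        # 5 positions, d_model = 8
Wq, Wk, Wv, Wo = (rng.normal(size=(8, 8)) for _ in range(4))
print(multi_head_attention(X, X, Wq, Wk, Wv, Wo, num_heads=2).shape)
```

Keras also provides a tf.keras.layers.MultiHeadAttention layer, which can be applied to LSTM output sequences.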

Adding Interpretability to LSTM Attention Models

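Attention weights give a built-in view of what the model focused on, but they are not guaranteed to be a faithful explanation of its decisions. You can strengthen interpretability with: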

- Alignment scores visualization

- Attention heatmaps for each prediction

- Context vector tracking

- LIME or SHAP for understanding how features contribute

This is crucial for domains like healthcare, finance, or legal NLP, where transparency is key.

Best Practices for LSTM Attention Mechanism Implementation

- Use masking for padded sequences to avoid attention on padding tokens.

- Normalize input sequences to stabilize training.

- Use Bidirectional LSTM for better context in the encoder.

- Apply dropout and regularization to avoid overfitting.

- Monitor attention scores during training for debugging.
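Masking comes down to giving padded positions zero attention weight. A minimal NumPy sketch, assuming padding id 0 and a boolean mask that is True for real tokens; masked_softmax is a hypothetical helper name. In Keras, setting mask_zero=True on the Embedding layer produces such a mask automatically, and layers like LSTM and Attention consume it.

```python
import numpy as np

def masked_softmax(scores, mask):
    """Softmax over the last axis that gives zero weight to padded positions.

    scores: (..., T) raw attention scores
    mask:   (..., T) boolean, True for real tokens and False for padding
    """
    scores = np.where(mask, scores, -1e9)   # push padding scores to a huge negative value
    e = np.exp(scores - scores.max(axis=-1, keepdims=True))
    e = e * mask                            # exact zeros for padding, even in all-padding rows
    return e / np.maximum(e.sum(axis=-1, keepdims=True), 1e-9)

token_ids = np.array([[5, 8, 2, 0, 0]])         # hypothetical batch; 0 = padding id
mask = token_ids != 0
scores = np.array([[1.0, 2.0, 0.5, 3.0, 3.0]])  # padding would dominate without the mask
print(masked_softmax(scores, mask).round(3))
```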

Real-World Examples of LSTM Attention Mechanisms

1. Neural Machine Translation

Google’s original NMT used attention to improve fluency and accuracy.

2. Legal Document Summarization

Focuses on important clauses using attention-guided summarization.

3. Biomedical NLP

Used in disease prediction models to identify influential symptoms or notes.

4. Chatbots & QA Systems

Allows chatbots to understand context and give relevant answers.

Conclusion

The LSTM attention mechanism improves both the performance and the interpretability of NLP models. By focusing on the relevant parts of the input, attention typically improves accuracy, copes better with long sequences, and exposes weights you can inspect.

Whether you’re building a translation tool, a text summarizer, or an emotion classifier, adding attention to your LSTM model can noticeably improve both its accuracy and its transparency.

From Bahdanau and Luong attention to visualization and multi-head extensions, mastering attention is essential for any deep learning practitioner today.

FAQs

1. What’s the difference between Bahdanau and Luong attention?

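Bahdanau uses additive attention (a small feedforward network learns the alignment scores from the previous decoder state), while Luong uses multiplicative scores such as the dot product, which are simpler and faster. Both work well; the better choice depends on your task.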

2. Can I use attention with Bidirectional LSTM?

Absolutely. It improves encoder context and usually boosts performance.

3. Is attention limited to LSTM models?

No. It’s widely used in transformers and CNNs too, but LSTM + attention is still popular for many sequence tasks.

4. How do I visualize LSTM attention?

Use heatmaps of attention weights per prediction. Libraries like Seaborn and Matplotlib are great for this.

5. Is attention interpretable?

Yes, to a degree. Showing what the model is focusing on for each prediction is one of its key strengths, though attention weights are not a complete explanation of the model’s reasoning.

Discover more from Neural Brain Works - The Tech blog
