LSTM Model Deployment: A Comprehensive Guide to Deploying LSTM Models in Production

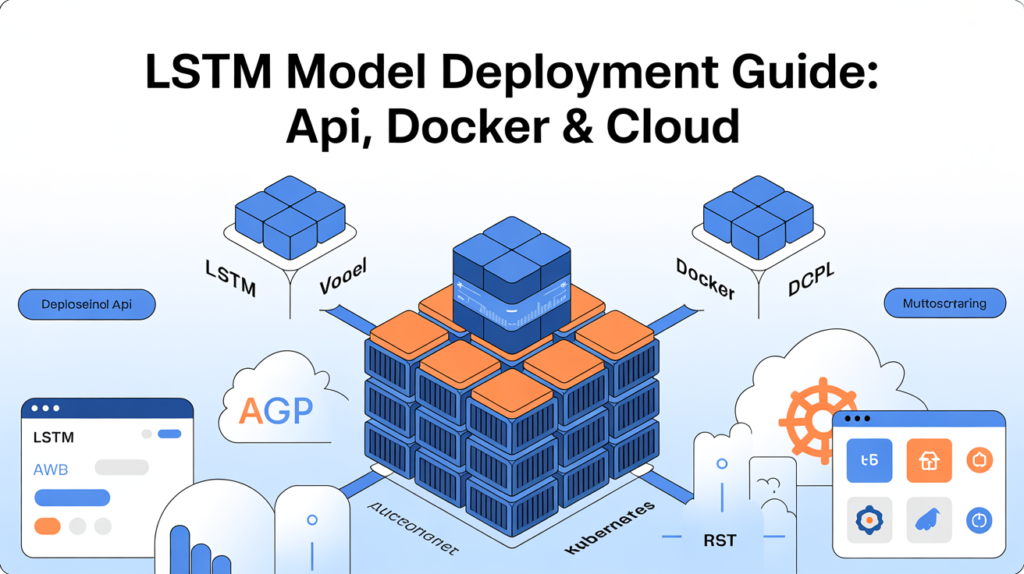

Deploying LSTM models is the final, and critical, step in bringing deep learning from development to production. Whether you’re creating an API endpoint, containerizing your model, or scaling in the cloud, effective LSTM model deployment is what makes your predictions reliable, scalable, and efficient. In this guide, we’ll explore deployment best practices, from REST APIs and Docker to Kubernetes and real-time monitoring, along with code examples and architectural diagrams.

Why is LSTM Model Deployment Crucial?

Before diving into deployment strategies, it’s essential to understand why LSTM model deployment matters:

- Real-time inference needs: Many LSTM use cases—like time series forecasting or NLP—require low-latency predictions.

- Scalability: Production environments demand horizontal scaling, load balancing, and fault tolerance.

- Maintainability: Models must be versioned and maintained as new data or models arrive.

- Monitoring: You must continuously track model performance and infrastructure health.

Failing to deploy models correctly can lead to unreliable behavior, poor user experiences, and technical debt. Let’s explore deployment steps in detail.

1. Model Serialization and Packaging

Saving and Loading LSTM Models Correctly

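- Use the standard Keras APIs: `model.save('lstm_model.h5')` saves the full model, while `model.save_weights()` saves the weights only.

- For the TensorFlow SavedModel format: `model.save('saved_model_dir')`. In newer TensorFlow releases that ship Keras 3, `model.save()` expects a `.keras` or `.h5` file name, and `model.export('saved_model_dir')` writes the SavedModel instead.

- Using a standard format keeps the model portable across serving platforms (Flask, TensorFlow Serving, SageMaker).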

Package Model with Preprocessing Logic

Include tokenizers, scalers, or feature pipelines alongside your trained model. Better yet, wrap them in a standardized deployment package to reduce mismatch when serving predictions.
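One way to keep preprocessing and model in lockstep is to store the fitted preprocessing parameters in a small metadata file next to the serialized model. The sketch below is illustrative only: the `preprocessing.json` file name, the min-max scaler, and the helper names are assumptions rather than part of any library.

```python
import json
from pathlib import Path


def fit_min_max(values):
    """Compute min-max scaling parameters from training data."""
    return {"min": min(values), "max": max(values)}


def save_bundle_metadata(bundle_dir, scaler_params, timesteps):
    """Write scaler parameters and the expected window length next to the model."""
    bundle_dir = Path(bundle_dir)
    bundle_dir.mkdir(parents=True, exist_ok=True)
    meta = {"scaler": scaler_params, "timesteps": timesteps}
    # Hypothetical file name; any stable name shared by training and serving works.
    (bundle_dir / "preprocessing.json").write_text(json.dumps(meta))


def load_bundle_metadata(bundle_dir):
    """Read the metadata back at serving time."""
    return json.loads((Path(bundle_dir) / "preprocessing.json").read_text())


def scale(sequence, scaler_params):
    """Apply the same min-max scaling that was used during training."""
    lo, hi = scaler_params["min"], scaler_params["max"]
    span = (hi - lo) or 1.0  # avoid division by zero for constant data
    return [(v - lo) / span for v in sequence]
```

At serving time, the API would call `load_bundle_metadata` once at startup and run every incoming sequence through `scale` before prediction, so training and inference can never disagree about the scaling range.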

2. Building REST APIs for LSTM Model Deployment

Deploy LSTM Models with Flask or FastAPI

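Flask Example:

    from flask import Flask, request, jsonify
    import numpy as np
    import tensorflow as tf

    app = Flask(__name__)
    # Load the model once at startup rather than on every request.
    model = tf.keras.models.load_model('lstm_model.h5')

    @app.route('/predict', methods=['POST'])
    def predict():
        data = request.json['sequence']
        # Add the batch dimension; the sequence itself must be (timesteps, features).
        x = np.asarray(data, dtype='float32')[np.newaxis, ...]
        prediction = model.predict(x)
        return jsonify({'prediction': prediction.tolist()})

    if __name__ == '__main__':
        app.run(host='0.0.0.0', port=5000)

FastAPI Example: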

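    from typing import List

    import numpy as np
    import tensorflow as tf
    import uvicorn
    from fastapi import FastAPI
    from pydantic import BaseModel

    app = FastAPI()
    model = tf.keras.models.load_model('lstm_model.h5')

    class PredictRequest(BaseModel):
        sequence: List[float]

    @app.post('/predict')
    def predict(req: PredictRequest):
        # Plain def: FastAPI runs it in a thread pool, so the blocking
        # model.predict call does not stall the event loop.
        # Assumes a univariate series: reshape to (batch, timesteps, features).
        x = np.asarray(req.sequence, dtype='float32').reshape(1, -1, 1)
        pred = model.predict(x)
        return {'prediction': pred.tolist()}

    if __name__ == "__main__":
        uvicorn.run(app, host="0.0.0.0", port=8000)

These simple APIs give you a Python starting point for serving LSTM predictions in production.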
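Before a request reaches `model.predict`, it helps to reject malformed input with a clear error instead of letting the model fail on it. The following is a minimal sketch of such a check for a JSON body with a `sequence` field; the `validate_sequence` name and the fixed window length are assumptions for illustration.

```python
import math


def validate_sequence(payload, timesteps):
    """Return the sequence as floats, or raise ValueError with a client-facing message."""
    if not isinstance(payload, dict) or "sequence" not in payload:
        raise ValueError("body must be a JSON object with a 'sequence' field")
    seq = payload["sequence"]
    if not isinstance(seq, list) or len(seq) != timesteps:
        raise ValueError(f"'sequence' must be a list of {timesteps} numbers")
    cleaned = []
    for v in seq:
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(v, bool) or not isinstance(v, (int, float)):
            raise ValueError("'sequence' must contain only numbers")
        try:
            x = float(v)
        except OverflowError:
            raise ValueError("'sequence' contains a number that is too large") from None
        if not math.isfinite(x):
            raise ValueError("'sequence' must not contain NaN or infinity")
        cleaned.append(x)
    return cleaned
```

In a Flask handler, you could catch the `ValueError` and return its message with a 400 status, so clients can tell a bad request apart from a server failure.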

3. Docker Containerization for LSTM Deployment

Dockerizing LSTM Model APIs

Use Docker to create standardized, portable environments for your LSTM inference service.

Dockerfile Example:

    FROM python:3.9-slim
    WORKDIR /app
    COPY . /app
    RUN pip install --no-cache-dir tensorflow flask
    EXPOSE 5000
    CMD ["python", "app.py"]

Build and run:

    docker build -t lstm-api .
    docker run -d -p 5000:5000 lstm-api

This assumes app.py starts the Flask server on 0.0.0.0:5000 so that the published port reaches it. Containerization gives you consistent deployment across environments and simplifies scaling and orchestration.

4. Deploying with TensorFlow Serving

For production-grade inference, use TensorFlow Serving:

    tensorflow_model_server \
      --model_name=lstm_model \
      --port=8500 \
      --rest_api_port=8501 \
      --model_base_path=/models/lstm_model

TensorFlow Serving provides high-performance inference and lets multiple model versions coexist: it looks for numbered version subdirectories (for example, /models/lstm_model/1/) under the base path and serves the highest version by default.
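With `--rest_api_port=8501`, TensorFlow Serving accepts prediction requests at `/v1/models/<model_name>:predict` with a JSON body containing an `instances` list. Here is a small sketch of how a client might build that request using only the standard library. The `localhost` host and the univariate input shape are assumptions, and the request is constructed but not sent.

```python
import json
import urllib.request


def build_predict_request(sequence, host="localhost", port=8501,
                          model_name="lstm_model"):
    """Build (but do not send) a TensorFlow Serving REST predict request."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    # One instance of shape (timesteps, 1): assumes a univariate LSTM input.
    instance = [[float(v)] for v in sequence]
    body = json.dumps({"instances": [instance]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_predict_request([0.1, 0.2, 0.3])
# To call a running server:
# with urllib.request.urlopen(req) as resp:
#     predictions = json.loads(resp.read())["predictions"]
```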

5. Cloud Deployment Strategies and Scalability

Deploy on AWS, GCP, or Azure Cloud

- AWS SageMaker and Google Cloud’s Vertex AI (the successor to AI Platform) provide managed endpoints for deploying LSTM models in the cloud.

- Autoscaling detects load and spins up additional inference instances.

- Use edge deployment via AWS Greengrass or Azure IoT Edge for real-time prediction near data sources.

Kubernetes Orchestration

Use Kubernetes to orchestrate multiple containers running LSTM inference:

- Use Deployments and Services to expose REST APIs.

- Include load balancing across pods.

- Enable A/B testing and canary rollouts for different model versions.

6. Scaling, Monitoring, and MLOps Integration

Scaling Strategies

- Horizontal scaling by adding more inference pods or instances.

- Use load balancers (NGINX or cloud provider).

- Use autoscaling based on request rates or CPU/GPU metrics.
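To make metric-based autoscaling concrete, the Kubernetes Horizontal Pod Autoscaler derives the desired replica count as ceil(currentReplicas × currentMetric / targetMetric). The sketch below reproduces that arithmetic; the function name, the replica bounds, and the sample numbers are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Replica count following the Horizontal Pod Autoscaler scaling formula."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds, as an autoscaler would.
    return max(min_replicas, min(max_replicas, raw))


# 4 pods averaging 90% CPU against a 60% target scale out to 6 pods.
print(desired_replicas(4, 90, 60))  # 6
```

The same calculation works for request rate or GPU utilization; only the metric fed into it changes.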

Model Versioning & A/B Testing

- Maintain multiple model versions for shadow deployments.

- Route production traffic to model_v1 while testing model_v2 with limited traffic.
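A simple way to send a limited share of traffic to model_v2 is to hash a stable request or user identifier into a bucket, so the same caller always reaches the same version. This is only a sketch of the idea: the function name and the 10% default are assumptions, and in practice the split often lives in a service mesh or ingress instead.

```python
import hashlib


def choose_model_version(request_id, canary_percent=10):
    """Deterministically route a request to 'model_v1' or 'model_v2'."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a bucket in [0, 100).
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "model_v2" if bucket < canary_percent else "model_v1"
```

Because the routing depends only on the identifier, raising `canary_percent` gradually moves more callers to model_v2 without reshuffling those already on it.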

Monitoring Systems

- Log latency, request throughput, and error rates.

- Monitor model drift or performance decay using drift detection tools.

- Use systems like Prometheus, Grafana, or CloudWatch for dashboards and alerts.
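As a rough illustration of drift monitoring, you can compare the mean of recent inputs against the training data, measured in training standard deviations. This is a deliberately simple heuristic with an assumed threshold of 3.0; dedicated drift detection tools use richer statistical tests.

```python
import statistics


def mean_shift_score(reference, recent):
    """How many reference standard deviations the recent mean has moved."""
    ref_mean = statistics.fmean(reference)
    ref_std = statistics.pstdev(reference)
    if ref_std == 0:
        raise ValueError("reference data has zero variance")
    return abs(statistics.fmean(recent) - ref_mean) / ref_std


def has_drifted(reference, recent, threshold=3.0):
    """Flag drift when the shift exceeds the (assumed) threshold."""
    return mean_shift_score(reference, recent) > threshold
```

A scheduled job could run `has_drifted` over each input feature and raise an alert through the same channel used for latency and error-rate alarms.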

7. Edge Deployment and Real-Time Inference

Deploying LSTM to Edge Devices

Edge deployment involves running LSTM inference on constrained hardware (e.g., Raspberry Pi, mobile, embedded systems):

- Use TensorFlow Lite or ONNX for model conversion and quantization.

- Optimize your LSTM for memory and compute (small model, quantized weights).

- Ideal for real-time sentiment analysis, anomaly detection, or predictive maintenance.
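To see why quantized weights shrink a model, here is a conceptual sketch of 8-bit affine quantization in plain Python. Converters such as TensorFlow Lite handle this for you with their own schemes; the function names below are assumptions, and the code only illustrates the trade-off between storage and precision.

```python
def quantize_int8(weights):
    """Map float weights to int8 values with an affine (scale, zero-point) scheme."""
    # The representable range must include 0.0 so that zero maps exactly.
    lo = min(min(weights), 0.0)
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if every weight is zero
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(w / scale) + zero_point)) for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float weights from their int8 representation."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
quantized, scale, zero_point = quantize_int8(weights)
restored = dequantize(quantized, scale, zero_point)
```

Each weight now fits in one byte instead of four, at the cost of a small rounding error per weight.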

8. Pipeline Sample: LSTM Serialization → API → Docker → Kubernetes

Here’s a step-by-step deployment pipeline:

- Train and save LSTM model as `saved_model_dir`.

- Wrap inference logic in REST API (Flask/FastAPI).

- Dockerize API with dependencies and model assets.

- Push Docker image to registry (e.g., DockerHub or AWS ECR).

- Deploy on Kubernetes with autoscaling and load balancing.

- Expose service through Ingress Controller.

- Integrate monitoring pipelines with Prometheus and alerting.

This approach helps keep your production LSTM deployment scalable, maintainable, and secure.

9. Best Practices for LSTM Model Deployment

- Ensure model serialization is consistent across dev and prod.

- Enable secure APIs with authentication and rate limiting.

- Use model versioning to enable rollbacks and A/B testing.

- Include health-check endpoints in your API for load balancers.

- Implement logging and auditing for inference requests and model decisions.

- Use batch inference pipelines for large-scale predictions (e.g., ETL jobs).
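Rate limiting, one of the practices above, is often implemented with a token bucket. Here is a minimal in-process sketch; the class name and the injectable clock are assumptions for illustration, and a production setup would more likely enforce limits at the API gateway or in a shared store.

```python
import time


class TokenBucket:
    """Allow up to `rate` requests per second with bursts of up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        """Return True if the request may proceed, consuming one token."""
        now = self.clock()
        # Refill tokens for the time elapsed since the last call.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Keeping one bucket per API key lets the `/predict` handler return a 429 response when `allow()` is false, which protects the model from a single noisy client.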

Conclusion

LSTM model deployment brings your predictive models into the real world. Whether you’re exposing REST APIs with Flask, containerizing via Docker, leveraging TensorFlow Serving, or deploying on Kubernetes in the cloud—each step ensures scalability, reliability, and production readiness. From deployment best practices to real-time edge use cases and monitoring pipelines, mastering LSTM deployment is essential for ML practitioners in 2025 and beyond.

By following a structured pipeline—from model serialization to scalable serving—and incorporating MLOps principles (versioning, monitoring, A/B testing)—you’ll build robust, production-grade LSTM systems ready for real-world impact.

FAQs

1. What’s the best deployment method for LSTM in production?

It depends on requirements: Flask/FastAPI for simple services; Docker + Kubernetes for scalable, production-ready systems; TensorFlow Serving for optimized inference.

2. Can LSTM models be deployed in real-time edge environments?

Yes, by converting them to lightweight formats like TensorFlow Lite and deploying on edge hardware.

3. How do I version LSTM models in deployment?

Track serialized model files, use REST APIs with versioned endpoints, or enable model versioning in TensorFlow Serving.

4. How can I monitor deployed LSTM models?

Use tools like Prometheus, Grafana, or CloudWatch to track latency, throughput, error rates, and model drift.

5. Is containerization necessary for LSTM deployment?

While not always necessary, containerization (Docker) greatly improves portability, reproducibility, and orchestration.
