LSTM Seq2Seq: A Complete Guide to Sequence-to-Sequence Models with Python

Sequence-to-Sequence (Seq2Seq) models are the backbone of many Natural Language Processing (NLP) applications, from machine translation to text summarization and dialogue generation. Among these, LSTM Seq2Seq models stand out for their ability to handle variable-length inputs and outputs using a memory-aware architecture.

In this advanced tutorial, you’ll learn how to build, train, and optimize LSTM-based Seq2Seq models using Python, Keras, and TensorFlow. We’ll cover encoder-decoder architecture, teacher forcing, attention mechanisms, and evaluation using BLEU scores. By the end, you’ll be equipped to tackle real-world NLP tasks with confidence.

What is an LSTM Seq2Seq Model?

An LSTM Seq2Seq model is a deep learning architecture that maps a variable-length input sequence to a variable-length output sequence using two main components:

- Encoder: Reads and compresses the input sequence into a context vector.

- Decoder: Uses that context vector to generate the output sequence one token at a time.

This architecture is ideal for tasks like:

- Machine translation

- Text summarization

- Question answering

- Chatbots

- Code generation

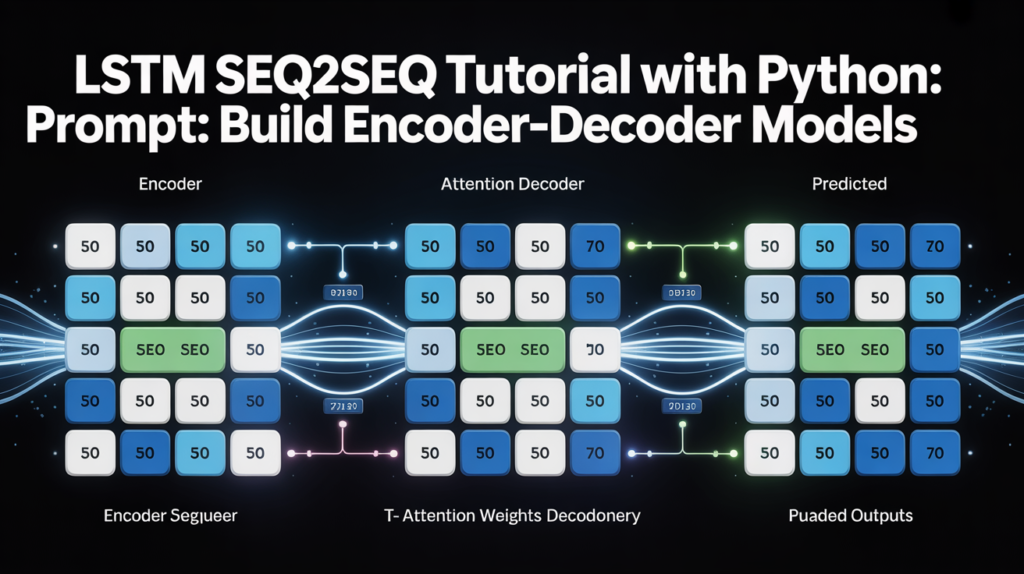

How the Encoder-Decoder Architecture Works

Here’s a simplified breakdown of the flow:

- Encoder LSTM: Processes the input sequence and returns its final hidden and cell states, which have a fixed size regardless of input length.

- Context Vector: These final states, which act as a fixed-size summary of the whole input.

- Decoder LSTM: Uses the context vector to generate output step-by-step.

- Output Layer: Generates predictions token by token using a softmax layer.

This structure handles inputs and outputs of different lengths, which makes it well suited to NLP and time series modeling.

LSTM Seq2Seq Model in Python (Keras/TensorFlow)

Let’s build a basic LSTM Seq2Seq implementation using Keras.

Step 1: Import Required Libraries

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Input, LSTM, Dense

import numpy as np

Step 2: Define the Encoder

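# num_encoder_tokens is the input vocabulary size (inputs are one-hot encoded)
encoder_inputs = Input(shape=(None, num_encoder_tokens))

encoder_lstm = LSTM(256, return_state=True)

# Discard the per-step outputs; keep only the final hidden and cell states
encoder_outputs, state_h, state_c = encoder_lstm(encoder_inputs)

encoder_states = [state_h, state_c]

Step 3: Define the Decoder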

# num_decoder_tokens is the output vocabulary size
decoder_inputs = Input(shape=(None, num_decoder_tokens))

# return_sequences=True yields an output at every time step
decoder_lstm = LSTM(256, return_sequences=True, return_state=True)

# Start the decoder from the encoder's final states
decoder_outputs, _, _ = decoder_lstm(decoder_inputs, initial_state=encoder_states)

decoder_dense = Dense(num_decoder_tokens, activation='softmax')

decoder_outputs = decoder_dense(decoder_outputs)

Step 4: Compile the Model

model = Model([encoder_inputs, decoder_inputs], decoder_outputs)

# categorical_crossentropy expects one-hot encoded targets
model.compile(optimizer='adam', loss='categorical_crossentropy')

Understanding Teacher Forcing

Teacher forcing is a training technique where the actual output from the previous time step is fed as input to the decoder during training instead of the model’s own prediction. This accelerates convergence and stabilizes training.

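However, teacher forcing is not available during inference: the decoder has to consume its own predictions, which it never saw during training. This mismatch is known as exposure bias. Scheduled sampling reduces it by gradually mixing the model’s own predictions into the decoder inputs during training, while beam search limits the damage of early mistakes at inference time.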
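To make the one-step offset concrete, here is a minimal sketch in plain Python of how a teacher-forcing training pair is typically built. The `<sos>` and `<eos>` marker names and the helper `make_teacher_forcing_pair` are assumptions made for illustration; they are not part of the Keras model above.

```python
def make_teacher_forcing_pair(target_tokens, sos="<sos>", eos="<eos>"):
    """Return (decoder_input, decoder_target) for one target sentence.

    The decoder input is the ground-truth sequence shifted right by one step,
    so at step t the decoder sees the true token t-1 and must predict token t.
    """
    decoder_input = [sos] + list(target_tokens)
    decoder_target = list(target_tokens) + [eos]
    return decoder_input, decoder_target


dec_in, dec_out = make_teacher_forcing_pair(["je", "suis", "ici"])
for seen, expected in zip(dec_in, dec_out):
    print(f"decoder sees {seen!r} -> should predict {expected!r}")
```

With the model defined earlier, both sequences would additionally be one-hot encoded before being passed to `model.fit`.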

Handling Variable-Length Sequences and Padding

Since input and output sequences can vary in length, we need to:

- Pad sequences to the same length using pad_sequences()

- Mask the loss function to ignore padded tokens during training

from tensorflow.keras.preprocessing.sequence import pad_sequences

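padded_input = pad_sequences(input_sequences, padding='post')

If the model uses an Embedding layer, set mask_zero=True so that downstream layers skip padded positions (this requires reserving index 0 for padding), and make sure the loss ignores those positions as well.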
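What loss masking means can be sketched in plain Python, without any framework. The helpers `pad_post` and `masked_cross_entropy` and the choice of 0 as the padding id are assumptions for illustration; in Keras the masking is normally handled by the layers and the loss rather than written by hand.

```python
import math

PAD_ID = 0  # assumption: token id 0 is reserved for padding


def pad_post(sequences, pad_id=PAD_ID):
    """Right-pad every sequence to the length of the longest one."""
    max_len = max(len(seq) for seq in sequences)
    return [list(seq) + [pad_id] * (max_len - len(seq)) for seq in sequences]


def masked_cross_entropy(target_ids, predicted_probs, pad_id=PAD_ID):
    """Average negative log-likelihood over non-padding positions only.

    target_ids: token ids for one (padded) sequence.
    predicted_probs: one probability distribution (list of floats) per step.
    """
    total, count = 0.0, 0
    for target, probs in zip(target_ids, predicted_probs):
        if target == pad_id:
            continue  # padded step: contributes nothing to the loss
        total += -math.log(probs[target])
        count += 1
    return total / count if count else 0.0


padded = pad_post([[1, 2], [1]])  # second sequence becomes [1, 0]
# Toy predictions over a 3-token vocabulary for the second sequence.
toy_probs = [[0.1, 0.8, 0.1], [0.9, 0.05, 0.05]]
loss = masked_cross_entropy(padded[1], toy_probs)
print(padded, loss)
```

Only the first step of the second sequence counts toward the loss here; the padded step is skipped however confident the prediction for it is.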

Integrating Attention with LSTM Seq2Seq

Attention enhances the model by allowing the decoder to access all encoder hidden states at every time step instead of relying on a single context vector.

You can use:

- Bahdanau Attention (Additive)

- Luong Attention (Multiplicative)

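Keras ships built-in layers for both: tf.keras.layers.AdditiveAttention (Bahdanau-style) and tf.keras.layers.Attention (Luong-style dot-product). The tensorflow_addons.seq2seq module also provides attention wrappers, but TensorFlow Addons has been deprecated, so the built-in layers are the safer choice for new code.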

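# Simplified example using the built-in Keras layer

from tensorflow.keras.layers import Attention

# query: decoder outputs; value: encoder outputs (the encoder LSTM needs return_sequences=True)

context_vector, attention_weights = Attention()([query, value], return_attention_scores=True)

Attention improves translation quality, especially for longer sequences.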
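To show what happens inside such a layer, here is a minimal sketch of dot-product (Luong-style) attention for a single decoder step, written in plain Python. The function names and the toy vectors are assumptions for illustration; a real model would operate on batched tensors.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(query, encoder_states):
    """Return (context_vector, attention_weights) for one decoder step.

    query: decoder hidden state, a list of floats.
    encoder_states: one hidden-state vector per input position.
    """
    # Score each encoder state by its dot product with the query.
    scores = [sum(q * h for q, h in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    # The context vector is the weighted sum of the encoder states.
    context = [
        sum(w * state[i] for w, state in zip(weights, encoder_states))
        for i in range(len(query))
    ]
    return context, weights


encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
context, weights = dot_product_attention([2.0, 0.0], encoder_states)
print(weights)
print(context)
```

The first and third encoder states align with the query, so they receive equal and larger weights than the second one. Additive (Bahdanau) attention differs only in how the scores are computed: it passes the query and each state through a small feed-forward network instead of taking a dot product.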

Inference Strategy for LSTM Seq2Seq

During inference:

- Encode the input sequence

- Initialize decoder with encoder states

- Generate tokens one by one, feeding the decoder’s output back in as the input for the next time step

This step-by-step prediction continues until the <eos> (end-of-sequence) token is generated or the max length is reached.
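The loop described above can be sketched in plain Python. Here `toy_step` and its transition table are hypothetical stand-ins for a trained decoder, chosen so that the example runs without TensorFlow; in practice the step function would call the decoder LSTM and its softmax layer and carry the LSTM states forward.

```python
def greedy_decode(step_fn, initial_state, sos="<sos>", eos="<eos>", max_len=20):
    """Generate tokens one at a time, feeding each prediction back as input."""
    token, state, output = sos, initial_state, []
    for _ in range(max_len):
        probs, state = step_fn(token, state)
        token = max(probs, key=probs.get)  # greedy: take the most likely token
        if token == eos:
            break
        output.append(token)
    return output


# Hypothetical stand-in for a trained decoder: a fixed transition table.
TOY_TABLE = {
    "<sos>": {"hello": 0.9, "world": 0.05, "<eos>": 0.05},
    "hello": {"hello": 0.1, "world": 0.8, "<eos>": 0.1},
    "world": {"hello": 0.1, "world": 0.1, "<eos>": 0.8},
}


def toy_step(prev_token, state):
    """Return next-token probabilities; the state is just a step counter here."""
    return TOY_TABLE[prev_token], state + 1


print(greedy_decode(toy_step, initial_state=0))  # ['hello', 'world']
```

The `max_len` guard matters: without it, a model that never emits the end-of-sequence token would loop forever.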

Beam Search for Better Predictions

Instead of greedy decoding, use beam search to explore multiple likely sequences and pick the best one based on cumulative probability.

Beam search often improves output fluency over greedy decoding, especially in machine translation and abstractive summarization tasks.

# Pseudo-code

for beam in beams:

    extend_beam()

rank_by_score()

Set a beam width (e.g., 5–10) to balance quality and computational cost.
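A runnable version of the idea, as a minimal sketch in plain Python: `toy_next_probs` is a hypothetical stand-in for the decoder, and the sketch ranks hypotheses by summed log-probability without length normalization, which is a simplification.

```python
import math


def beam_search(step_fn, sos="<sos>", eos="<eos>", beam_width=3, max_len=10):
    """Return the highest-scoring token sequence found by beam search.

    step_fn(prefix) must return a dict mapping each next token to its probability.
    Summing log-probabilities ranks sequences by cumulative probability.
    """
    beams = [([sos], 0.0)]  # (tokens so far, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for token, prob in step_fn(tokens).items():
                if prob > 0.0:
                    candidates.append((tokens + [token], score + math.log(prob)))
        # Keep only the best `beam_width` hypotheses.
        candidates.sort(key=lambda item: item[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_width]:
            if tokens[-1] == eos:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    best_tokens, _ = max(finished or beams, key=lambda item: item[1])
    return [t for t in best_tokens if t not in (sos, eos)]


# Hypothetical decoder: the locally best first token ("a") leads to a worse sequence.
TOY_PROBS = {
    "<sos>": {"a": 0.6, "b": 0.4},
    "a": {"x": 0.5, "y": 0.5},
    "b": {"z": 0.95, "<eos>": 0.05},
    "x": {"<eos>": 1.0},
    "y": {"<eos>": 1.0},
    "z": {"<eos>": 1.0},
}


def toy_next_probs(prefix):
    return TOY_PROBS[prefix[-1]]


print(beam_search(toy_next_probs, beam_width=1))  # ['a', 'x'] (same as greedy)
print(beam_search(toy_next_probs, beam_width=2))  # ['b', 'z']
```

With a width of 1 the search commits to "a" and ends with a total probability of 0.30, while a width of 2 keeps "b" alive and finds the sequence with probability 0.38.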

Evaluating LSTM Seq2Seq Models with BLEU Score

The BLEU (Bilingual Evaluation Understudy) score is a standard metric for evaluating translation and text generation quality.

from nltk.translate.bleu_score import sentence_bleu

reference = [['this', 'is', 'a', 'test']]

candidate = ['this', 'is', 'test']

bleu_score = sentence_bleu(reference, candidate)

BLEU measures how closely your generated sequence matches one or more reference translations by counting overlapping n-grams (up to 4-grams by default) and penalizing outputs that are too short.
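To see where the number comes from, here is a simplified BLEU written from scratch in plain Python. It is a sketch of the standard definition (clipped n-gram precision, geometric mean, brevity penalty) without smoothing, not a replacement for the NLTK implementation; the function names are assumptions for illustration.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def modified_precision(references, candidate, n):
    """Clipped n-gram precision: each candidate n-gram counts at most as often
    as it appears in any single reference."""
    cand_counts = Counter(ngrams(candidate, n))
    if not cand_counts:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


def simple_bleu(references, candidate, max_n=2):
    """Geometric mean of the 1..max_n precisions times the brevity penalty."""
    precisions = [modified_precision(references, candidate, n)
                  for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # no smoothing: one empty precision zeroes the score
    closest = min(references, key=lambda r: (abs(len(r) - len(candidate)), len(r)))
    c, r = len(candidate), len(closest)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    log_mean = sum(math.log(p) for p in precisions) / max_n
    return brevity_penalty * math.exp(log_mean)


reference = [['this', 'is', 'a', 'test']]
candidate = ['this', 'is', 'test']
print(round(simple_bleu(reference, candidate, max_n=2), 3))  # 0.507
print(simple_bleu(reference, candidate, max_n=4))            # 0.0
```

The second call shows a common pitfall: the three-token candidate shares no 3-grams or 4-grams with the reference, so an unsmoothed 4-gram BLEU collapses to zero. For short sentences, NLTK offers smoothing via the `smoothing_function` argument of `sentence_bleu` (for example `SmoothingFunction().method1`), and corpus-level BLEU is generally more informative than single-sentence scores.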

Use Cases of LSTM Seq2Seq Models

1. Machine Translation

Translate sentences from one language to another (e.g., English → French).

2. Abstractive Summarization

Generate concise summaries that don’t just extract but rephrase content.

3. Dialogue Generation

Power chatbots and virtual assistants to respond intelligently.

4. Code Generation

Translate natural language queries into code snippets or SQL queries.

These applications benefit from attention-enhanced Seq2Seq models for improved accuracy and contextual understanding.

Best Practices for Training LSTM Seq2Seq Models

- Use dropout and recurrent dropout to prevent overfitting.

- Apply gradient clipping to handle exploding gradients.

- Experiment with learning rate schedulers.

- Start with pre-trained embeddings (e.g., GloVe, FastText).

- Monitor loss curves and BLEU scores for performance.
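Of these practices, gradient clipping is the easiest to show in isolation. The sketch below rescales a set of gradients in plain Python so that their combined norm never exceeds a threshold; the helper name `clip_by_global_norm` and the toy values are assumptions for illustration. Keras optimizers expose related options such as `clipnorm`, `global_clipnorm`, and `clipvalue`, so this rarely needs to be written by hand.

```python
import math


def clip_by_global_norm(gradients, max_norm):
    """Scale all gradients by one factor so their global L2 norm is <= max_norm.

    gradients: list of gradient vectors (lists of floats), one per parameter.
    Returns (clipped_gradients, original_global_norm).
    """
    global_norm = math.sqrt(sum(g * g for grad in gradients for g in grad))
    if global_norm <= max_norm:
        return [list(grad) for grad in gradients], global_norm
    scale = max_norm / global_norm
    return [[g * scale for g in grad] for grad in gradients], global_norm


grads = [[3.0, 4.0], [0.0]]  # global norm is 5.0
clipped, norm = clip_by_global_norm(grads, max_norm=1.0)
print(norm, clipped)
```

Because every gradient is multiplied by the same factor, the update keeps its direction and only its size is limited, which is what keeps an exploding gradient from destabilizing training.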

Conclusion

LSTM Seq2Seq models are a foundational tool for modern NLP applications. With an encoder-decoder architecture enhanced by attention mechanisms, these models handle variable-length input and output with ease, making them ideal for machine translation, summarization, and more.

Whether you’re building your first translation engine or deploying an intelligent chatbot, mastering LSTM Seq2Seq will set you apart as a deep learning practitioner. Focus on implementing attention, using teacher forcing, optimizing inference, and evaluating your outputs with BLEU.

FAQs

1. What is an LSTM Seq2Seq model used for?

It’s used in NLP tasks like translation, summarization, and dialogue generation, where both input and output are sequences.

2. Why do we use attention in Seq2Seq models?

Attention improves performance by letting the decoder focus on different parts of the input sequence at each time step.

3. What is teacher forcing in LSTM models?

It’s a training technique where the ground-truth token from the previous time step, rather than the model’s own prediction, is fed into the decoder to speed up learning.

4. How is inference different from training in Seq2Seq?

During inference, outputs are generated one at a time using the model’s own predictions instead of actual labels.

5. How do I evaluate a Seq2Seq model?

Use BLEU scores for translation tasks and monitor loss and accuracy during training. Also consider manual inspection of outputs.
