LSTM Time Series Forecasting: How to Predict Sequential Data

When you’re dealing with patterns that unfold over time — stock prices, energy demand, patient vitals — time series forecasting becomes mission-critical. While classic models like ARIMA have their place, they often struggle with complex patterns and long-term dependencies.

This is where LSTM time series forecasting stands out. In this guide, you’ll learn what LSTM time series models are, why they’re so powerful, and how to build your own, and you’ll see practical examples to inspire your next project.

🚀 Why Time Series Forecasting Matters

Sequential data is at the heart of real-world decision-making. Here’s why it matters:

- 📈 Finance: Traders rely on accurate forecasts to identify trends and time the market.

- 🏭 Manufacturing: Predictive maintenance helps companies avoid costly machine downtime.

- 🛒 Retail: Accurate demand forecasting means better inventory management and higher profits.

- 🌦️ Weather & Environment: Weather models rely on robust sequential predictions to keep people safe.

Traditional statistical models like ARIMA or Exponential Smoothing work well for linear trends and regular seasonality. But they tend to fall short when the data is non-linear, has several overlapping seasonal cycles, or is influenced by hidden factors. That’s where deep learning, specifically LSTM time series forecasting, can take the lead.

🧠 A Quick Recap: What is an LSTM?

An LSTM (Long Short-Term Memory) network is a type of Recurrent Neural Network (RNN) designed to tackle the limitations of traditional RNNs — specifically the vanishing gradient problem that makes learning long-term dependencies difficult.

In an LSTM cell, three gates (input, forget, and output) decide what information to store, what to discard, and what to output. This lets LSTM models retain important signals from far back in a sequence, which makes them well suited to sequential data prediction.
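For readers who like to see the math, the gates can be written out using the commonly taught LSTM formulation. The symbols here are assumptions introduced for illustration and do not come from this post: $x_t$ is the input at step $t$, $h_t$ the hidden state, $c_t$ the memory cell, $\sigma$ the logistic sigmoid, and $\odot$ element-wise multiplication.

```latex
\begin{align}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{forget gate: what to discard} \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{input gate: what to store} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{output gate: what to expose} \\
g_t &= \tanh(W_g x_t + U_g h_{t-1} + b_g) && \text{candidate values} \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t && \text{updated memory cell} \\
h_t &= o_t \odot \tanh(c_t) && \text{new hidden state}
\end{align}
```

Because $c_t$ is updated additively, a forget gate close to 1 lets information (and gradients) pass through many steps largely unchanged, which is how the design eases the vanishing gradient problem.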

🔍 How LSTM Time Series Forecasting Works

So, how does LSTM time series forecasting actually make predictions?

- Step 1: Data is fed in sequences (sliding windows of past values).

- Step 2: The LSTM’s memory cell processes these sequences, storing relevant info and forgetting noise.

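- Step 3: The final hidden state, which the output gate filters from the memory cell, is passed to an output layer (typically a dense layer) that produces the prediction, whether it’s tomorrow’s stock price, next month’s energy demand, or the next value in a pattern.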

Unlike a feedforward network, an LSTM has internal memory that helps it learn context and trends over time.
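The steps above can be sketched in plain Python. This is a toy illustration, not a trained model: it assumes a single hidden unit, scalar inputs, and hand-picked weights of 0.5, and the names `lstm_step` and `forecast_next` are hypothetical helpers invented for this example.

```python
import math


def sigmoid(z):
    """Logistic function, squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM time step with a scalar input and a single hidden unit."""
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])    # forget gate
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])    # input gate
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])    # output gate
    g = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])  # candidate value
    c = f * c_prev + i * g   # keep part of the old memory, add part of the new
    h = o * math.tanh(c)     # expose a filtered view of the memory
    return h, c


def forecast_next(window, p, w_out, b_out):
    """Run the cell over a window of past values, then apply a linear readout."""
    h, c = 0.0, 0.0
    for x in window:                 # Steps 1 and 2: feed the sequence through the cell
        h, c = lstm_step(x, h, c, p)
    return w_out * h + b_out         # Step 3: output layer on the final hidden state


# Illustrative, untrained parameters: every weight and bias set to 0.5.
params = {f"{kind}_{gate}": 0.5 for kind in ("w", "u", "b") for gate in "fiog"}

window = [0.1, 0.2, 0.3, 0.4]        # a sliding window of scaled past values
prediction = forecast_next(window, params, w_out=1.0, b_out=0.0)
print(prediction)
```

In a real project the weights are learned by backpropagation through time and the hidden state is a vector rather than a single number; a deep learning framework handles both.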

🛠️ Data Preprocessing — The Unsung Hero

A solid LSTM time series prediction project always starts with smart preprocessing. Here’s what to do:

✅ Visualize & Check Stationarity: Use plots and the Augmented Dickey-Fuller test. If data isn’t stationary, try differencing.

✅ Handle Missing Values: Fill gaps using interpolation, forward/backward fill, or domain-specific methods.

✅ Scale or Normalize: LSTMs work best when data is scaled to a similar range (e.g., 0–1).

✅ Create Sequences: Transform raw data into overlapping windows. For example, use the past 30 days of sales data to predict the next day’s sales.

✅ Split Data: Always split into training, validation, and test sets chronologically.
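Several items on this checklist can be sketched with nothing but the standard library. The example below assumes a tiny made-up series with `None` marking gaps, and all helper names (`forward_fill`, `chronological_split`, `fit_min_max`, `scale`, `make_windows`) are hypothetical. One choice that goes slightly beyond the checklist: the scaling range is computed from the training portion only, so no information from later data leaks into training.

```python
def forward_fill(values):
    """Replace None entries with the most recent observed value."""
    filled, last = [], None
    for v in values:
        if v is None:
            if last is None:
                raise ValueError("series starts with a missing value")
            v = last
        filled.append(v)
        last = v
    return filled


def chronological_split(values, val_size, test_size):
    """Split in time order: oldest data for training, newest for testing."""
    train_end = len(values) - val_size - test_size
    val_end = len(values) - test_size
    return values[:train_end], values[train_end:val_end], values[val_end:]


def fit_min_max(train):
    """Learn the scaling range from the training data only."""
    lo, hi = min(train), max(train)
    if hi == lo:
        raise ValueError("training data is constant; cannot scale")
    return lo, hi


def scale(values, lo, hi):
    """Map values so the training range becomes 0 to 1."""
    return [(v - lo) / (hi - lo) for v in values]


def make_windows(values, window):
    """Turn a series into overlapping (past window, next value) pairs."""
    X, y = [], []
    for start in range(len(values) - window):
        X.append(values[start:start + window])
        y.append(values[start + window])
    return X, y


# Made-up daily values with two gaps, purely for illustration.
raw = [10.0, 12.0, None, 15.0, 14.0, 16.0, None, 18.0, 20.0, 19.0,
       21.0, 23.0, 22.0, 24.0, 26.0, 25.0, 27.0, 29.0, 28.0, 30.0]

series = forward_fill(raw)
train, val, test = chronological_split(series, val_size=3, test_size=3)
lo, hi = fit_min_max(train)
train_s, val_s, test_s = (scale(part, lo, hi) for part in (train, val, test))
X_train, y_train = make_windows(train_s, window=3)

print(len(X_train), "training windows of length", len(X_train[0]))
```

Because the range comes from the training data, later values can land outside 0–1 when the series trends upward, as it does here. That is expected and usually harmless, though a strong trend is also a hint that differencing may help.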

📊 LSTM Time Series Example: Real-World Scenarios

To make this concrete, here are some LSTM time series examples in action:

📈 Stock Price Prediction

Feed in daily closing prices. The model learns patterns in past prices and outputs a forecast of tomorrow’s price.

🌦️ Weather Forecasting

Use multiple features — temperature, humidity, wind speed — to make short-term weather predictions.

⚡ Energy Demand Forecasting

Predict electricity usage spikes to optimize grid resources.

🏥 Healthcare Monitoring

Monitor patient vitals for anomalies. LSTMs detect subtle patterns that rule-based systems might miss.

🚚 Logistics & Supply Chain

Forecast demand and delivery times to streamline operations.

🔑 Benefits of Using LSTM for Time Series

- Captures long-term dependencies that classic models miss.

- Handles non-linear and complex patterns.

- Flexible for multivariate forecasting (multiple input variables).

- Adaptable to various domains.

⚠️ Challenges & Best Practices

While powerful, LSTM time series models come with pitfalls:

- 🧩 Data Hungry: Needs large datasets to learn well.

- ⏱️ Training Time: Computationally intensive, especially with big data.

- 🎯 Overfitting: Easily overfits if not tuned properly.

✅ Pro Tips:

- Tune sequence length thoughtfully.

- Use dropout layers to reduce overfitting.

- Try bidirectional LSTMs, which read each input window in both directions, for richer context.

- Re-train regularly — time series data drifts!
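One way to act on the re-training tip is a walk-forward schedule, where the model is re-fit on a growing history and checked on the block of data that follows. Walk-forward evaluation is a technique brought in here for illustration; it is not prescribed by this post. The helper `walk_forward_splits` and its parameters are hypothetical.

```python
def walk_forward_splits(n_samples, initial_train, horizon):
    """Yield (train_end, test_end) index pairs for an expanding training window.

    Each pair means: train on samples [0:train_end], then evaluate on
    samples [train_end:test_end] before re-training with more history.
    """
    train_end = initial_train
    while train_end + horizon <= n_samples:
        yield train_end, train_end + horizon
        train_end += horizon


splits = list(walk_forward_splits(n_samples=100, initial_train=60, horizon=10))
for train_end, test_end in splits:
    print(f"train on [0:{train_end}], evaluate on [{train_end}:{test_end}]")
```

If evaluation error climbs from one fold to the next, that is a sign the data is drifting and the model is due for a refresh.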

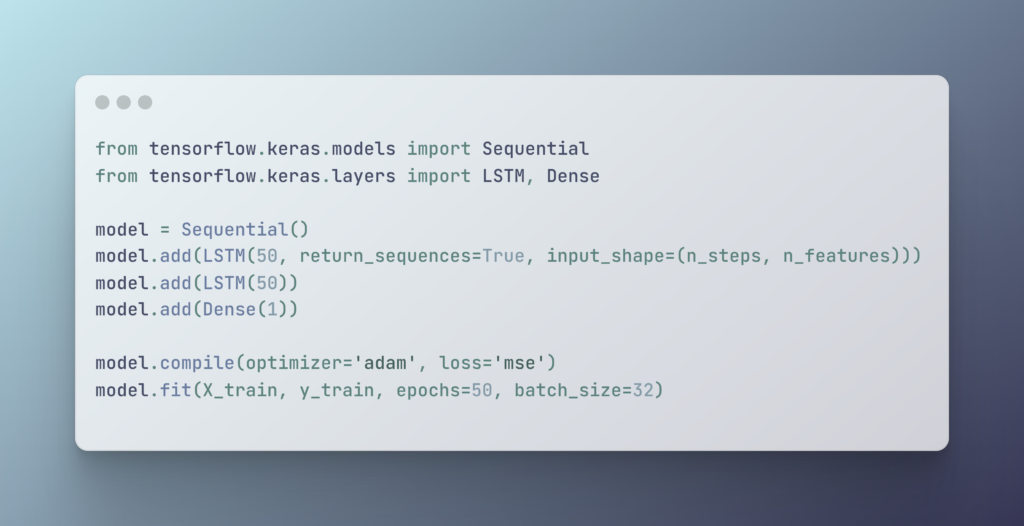

💻 Quick Practical Code Example
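Here is a minimal sketch of a single-layer LSTM forecaster, assuming TensorFlow 2.x with its bundled Keras API and NumPy are installed. The data is a synthetic noisy sine wave standing in for a real series, and the window length, layer size, dropout rate, and epoch count are illustrative assumptions rather than recommended settings.

```python
import numpy as np
import tensorflow as tf

WINDOW = 30  # past time steps per sample (assumption, echoing the 30-day example)

# Synthetic stand-in data: a noisy sine wave already roughly within 0 to 1.
rng = np.random.default_rng(0)
t = np.arange(400)
series = 0.5 + 0.4 * np.sin(2 * np.pi * t / 50) + 0.02 * rng.standard_normal(t.size)
series = series.astype("float32")

# Sliding windows: inputs shaped (samples, WINDOW, 1), targets are the next value.
X = np.stack([series[i:i + WINDOW] for i in range(len(series) - WINDOW)])[..., None]
y = series[WINDOW:]

# Chronological split: the earliest 80% of windows train, the latest 20% are held out.
split = len(X) * 4 // 5
X_train, y_train = X[:split], y[:split]
X_holdout, y_holdout = X[split:], y[split:]

model = tf.keras.Sequential([
    tf.keras.Input(shape=(WINDOW, 1)),
    tf.keras.layers.LSTM(32),       # returns the final hidden state
    tf.keras.layers.Dropout(0.2),   # regularization against overfitting
    tf.keras.layers.Dense(1),       # maps the hidden state to one forecast value
])
model.compile(optimizer="adam", loss="mse")

# Two epochs keep the demo fast; real training needs more.
history = model.fit(X_train, y_train, epochs=2, batch_size=32, verbose=0)

preds = model.predict(X_holdout, verbose=0)
print("hold-out MSE:", float(np.mean((preds[:, 0] - y_holdout) ** 2)))
```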

📌 This simple architecture works well for many beginner projects. Add callbacks, validation, and tuning for production.

🧩 Get Started: Check Out These Python Setup Guides

Working with LSTM models means your Python environment must be rock solid.

✅ Read our guide to installing the latest version of Python for a quick, easy setup.

🌐 Useful Resources

- Check practical examples on TensorFlow.org.

- Learn more about advanced forecasting on Towards Data Science.

🙋♀️ FAQs About LSTM Time Series

1. What is LSTM time series forecasting?

Using LSTM networks to predict future points in sequential data by learning patterns over time.

2. Why choose LSTM for time series prediction?

They capture complex, non-linear patterns and handle long-term dependencies better than traditional models.

3. Can I use LSTM for multivariate time series?

Absolutely! LSTMs accept multiple features per time step, which can improve accuracy when those features carry useful signal.

4. Is stationarity required for LSTM models?

Not strictly, but making your data more stable can help training.

5. How does LSTM compare to ARIMA?

ARIMA works well for simple, linear patterns; LSTM handles complex, non-linear ones with less manual feature engineering.
